Context

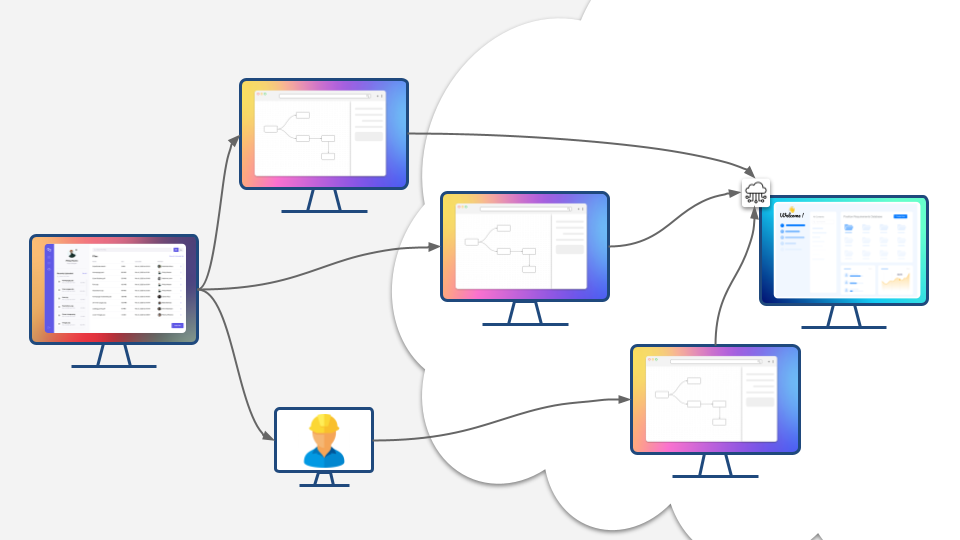

Let’s go over the different ways you can install Fast2 for your migration, here from an on-premise FileNet repository to an AWS S3 bucket.

The answers given here, however, apply to any on-premise-to-remote use case.

TL;DR:

| | On premise | Cloud/SaaS | Hybrid | Snowball |
|---|---|---|---|---|
| Restricted server access | ✅ | ❌ | ✅ | |
| Performance | ✅* / ❌** | ✅ | ✅ | ✅*** |
| Debugging | ✅ | ✅ | ❌ | ✅ |
| Scalability | ❌ | ✅ | ✅ | ❌ |

* If Fast2 is installed on a dedicated server on the same network as the on-premise ECM.

** If Fast2 is installed on the same machine as the on-premise ECM.

*** Drive shipping time not included.

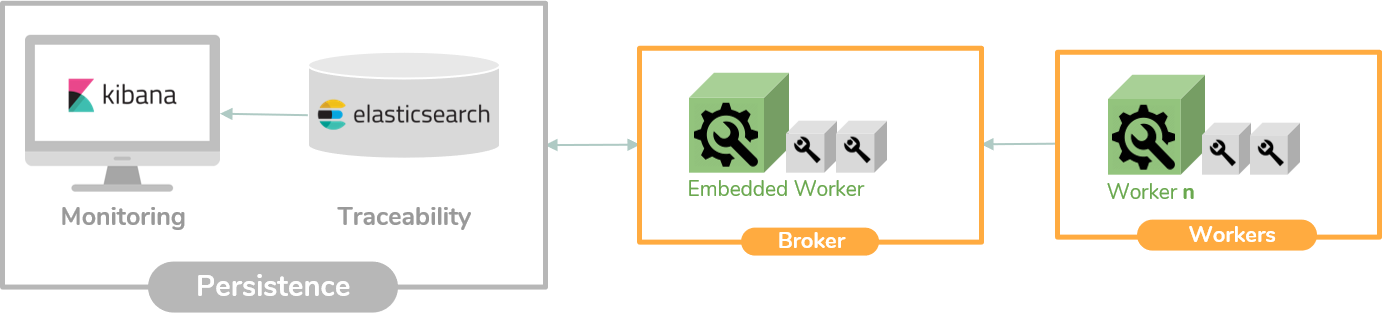

Let’s briefly recall the overall architecture of Fast2:

The broker is the migration orchestrator: it coordinates one or more workers and assigns them the tasks to process. The two components are interdependent, and Fast2 cannot run without both of them.
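To make the broker/worker split concrete, here is a deliberately simplified sketch of the pattern in Python, using an in-process queue and threads. It is not Fast2's implementation (its internals are not described here); the function name, task names and worker count are hypothetical. The "broker" puts tasks on a shared queue, each "worker" pulls and processes them, and the broker waits until everything is done.

```python
import queue
import threading


def run_migration(tasks, worker_count=2):
    """Toy broker: dispatch `tasks` to `worker_count` worker threads.

    Returns a dict mapping each task to the name of the worker that handled it.
    """
    todo = queue.Queue()
    handled = {}
    lock = threading.Lock()

    def worker(name):
        while True:
            task = todo.get()
            if task is None:  # sentinel from the broker: no more work
                todo.task_done()
                return
            # A real worker would extract, transform and load documents here.
            with lock:
                handled[task] = name
            todo.task_done()

    threads = [threading.Thread(target=worker, args=(f"worker-{i}",))
               for i in range(worker_count)]
    for t in threads:
        t.start()
    for task in tasks:   # broker role: assign the work...
        todo.put(task)
    for _ in threads:    # ...then tell every worker to stop
        todo.put(None)
    todo.join()          # wait until every queued item has been processed
    for t in threads:
        t.join()
    return handled


if __name__ == "__main__":
    result = run_migration([f"batch-{n}" for n in range(5)], worker_count=2)
    print(sorted(result))  # ['batch-0', 'batch-1', 'batch-2', 'batch-3', 'batch-4']
```

Because tasks are queued before the stop sentinels, every task is consumed before any worker exits; without workers, nothing in the queue would ever be processed.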

We can easily identify four installation options:

- On premise: on the same internal network as FileNet, for a more private entry point.

- Remote (e.g. on EC2): Fast2 accesses FileNet remotely, through ports opened to external communication.

- Hybrid: a remote broker (Fast2 installed on a remote server) with a local worker that accesses content and metadata directly on the local server where FileNet is also installed.

- AWS Snowball: data is loaded directly onto a Snowball drive, which then has to be shipped to AWS.

We’ll briefly discuss these approaches from three angles:

- Architecture

- Performance

- Ease of debugging

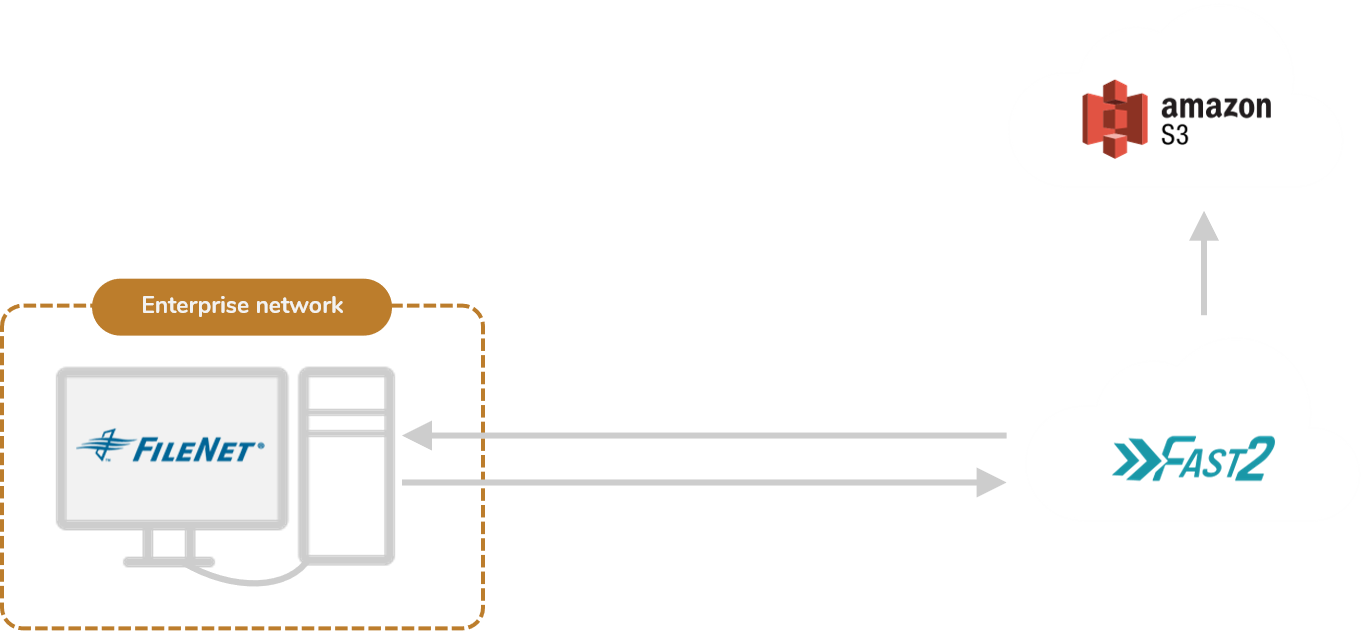

Option #1: On premise

Architecture

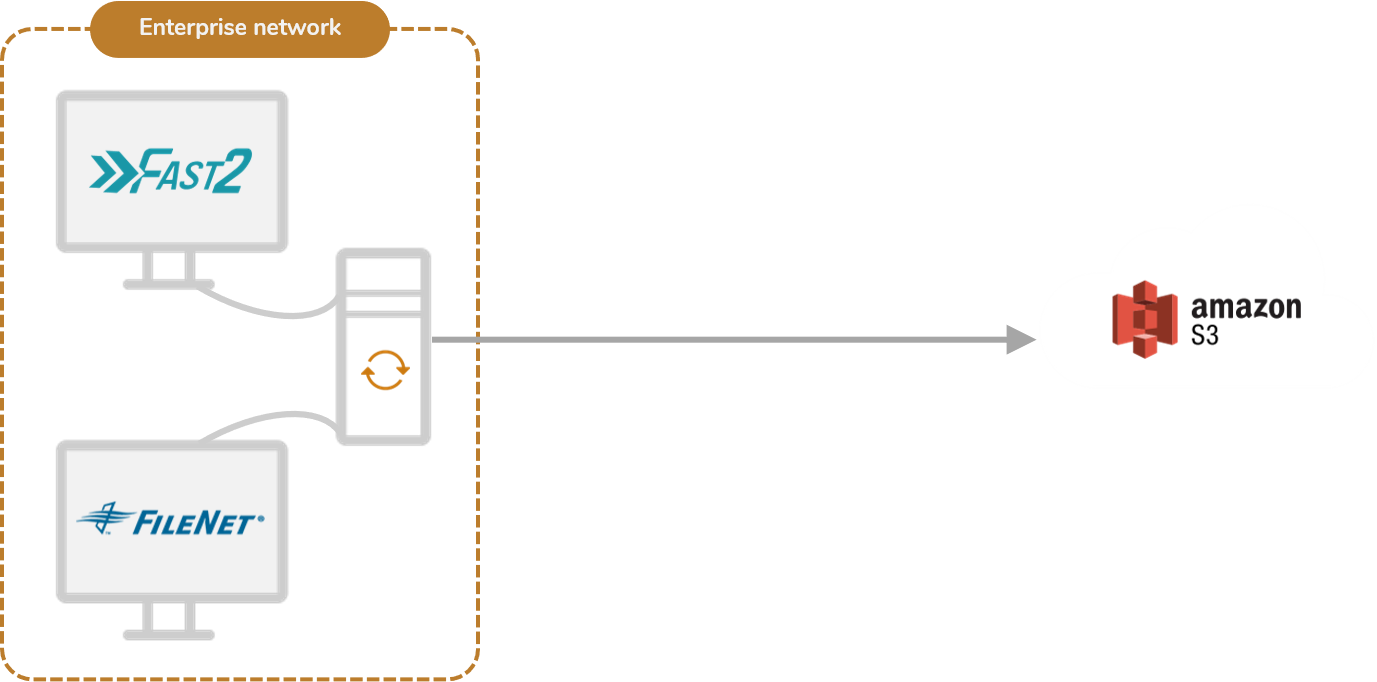

Within this configuration, two sub-options remain: installing the product on the same server as the source system, or on a dedicated secondary server connected to the same internal network.

Suboption A: same machine

Installing Fast2 in the same environment as your source ECM brings a few benefits in terms of access and privacy. The connection between Fast2 and the target system (in our case an AWS S3 bucket) is the only outbound channel your on-premise server is exposed to. Since our ETL relies on the S3 APIs to load data and content, the baseline security of this connection depends largely on what AWS provides for such operations.

Speaking of which, since the AWS APIs support encryption, Fast2 lets you send encrypted data and content to S3 buckets. The security of this outbound channel can therefore be tuned to your functional requirements.
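For readers curious about what "encrypted" means on the S3 side, the sketch below shows which parameters of the S3 `PutObject` call, as exposed by the AWS SDK for Python (boto3), request server-side encryption. It does not show how Fast2 itself is configured; the helper function, bucket, key and KMS key id are hypothetical placeholders, and the function only builds the request arguments so it can run without AWS credentials.

```python
from typing import Optional


def build_put_object_params(bucket: str, key: str, body: bytes,
                            kms_key_id: Optional[str] = None) -> dict:
    """Build keyword arguments for boto3's S3 `put_object`, asking S3 to
    encrypt the object at rest.

    Without a KMS key, S3-managed keys are requested (SSE-S3, "AES256");
    with one, AWS KMS-managed keys are used instead (SSE-KMS, "aws:kms").
    """
    params = {"Bucket": bucket, "Key": key, "Body": body}
    if kms_key_id is None:
        params["ServerSideEncryption"] = "AES256"
    else:
        params["ServerSideEncryption"] = "aws:kms"
        params["SSEKMSKeyId"] = kms_key_id
    return params


if __name__ == "__main__":
    # Placeholder names: replace with your own bucket, object key and KMS key.
    params = build_put_object_params("my-migration-bucket", "docs/0001.pdf",
                                     b"%PDF-1.7", kms_key_id="my-kms-key-id")
    print(params["ServerSideEncryption"])  # aws:kms
    # With boto3 installed and credentials configured, the upload would be:
    # import boto3
    # boto3.client("s3").put_object(**params)
```

These settings cover encryption at rest; in transit, boto3 talks to S3 over HTTPS by default.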

Suboption B: same network

Another way to install Fast2 on premise is to build, or reuse, a secondary server alongside your current ECM system. It offers nearly the same benefits as the previous setup, since all exchanges between Fast2 and the source system stay inside a closed perimeter: your company’s internal network. Communication security and the access rights to grant Fast2 do not differ much either. One real advantage, though, is process isolation (your ECM on one side, Fast2 on the other). Keeping the two apart prevents them from competing for memory and other resources.

Performance-wise

Suboption A: same machine

Installing Fast2 on the same server as one of the ECMs involved in the migration requires preliminary checks for potential conflicts between the two running processes. These conflicts can relate either to port availability (although most tools, Fast2 included, can be configured to listen on ports other than their defaults) or to the availability of physical resources.
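Such preliminary checks can be scripted. The sketch below is a generic pre-flight check, not a Fast2 tool: it tries to bind each candidate port and checks free disk space. The port numbers and the 10 GB threshold are placeholders to replace with the values your own Fast2 and ECM setup actually uses.

```python
import shutil
import socket


def port_is_free(port: int, host: str = "0.0.0.0") -> bool:
    """Return True if this process could bind host:port right now.

    Note: privileged ports (< 1024) may need elevated rights; a permission
    error is reported here as "not free" as well.
    """
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
        try:
            s.bind((host, port))
        except OSError:
            return False
    return True


def free_disk_gb(path: str = ".") -> float:
    """Free space on the filesystem holding `path`, in (decimal) gigabytes."""
    return shutil.disk_usage(path).free / 1e9


def preflight(ports, work_dir=".", min_free_gb=10.0):
    """Return a list of human-readable problems (an empty list means all clear)."""
    problems = [f"port {p} is already in use" for p in ports if not port_is_free(p)]
    if free_disk_gb(work_dir) < min_free_gb:
        problems.append(f"less than {min_free_gb} GB free under {work_dir}")
    return problems


if __name__ == "__main__":
    # Placeholder ports: use the ones configured for your Fast2 installation.
    for issue in preflight([8080, 8081]) or ["no conflict detected"]:
        print(issue)
```

Memory and CPU headroom deserve the same attention, ideally by observing the ECM's usage under its normal load before sizing Fast2.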

Suboption B: same network

Using a separate server does not mean giving up on installing Fast2 close to one of your ECMs. You get the best of both worlds: the easy reach of a same-machine installation, and the independence of separate servers whose processes still interoperate. Thanks to the short network distance, this configuration offers seamless access to the source system without sacrificing the performance or stability of either product. One last aspect to keep in mind is whether the server hosting the keystone of the migration, the ETL itself, can withstand the load: on a separate server, you will be in a better position to deal with scalability issues.

Ease of debugging

Having a single server host as many of the involved assets as possible might sound appealing: it is easier to configure, and there is only one place to look for information (logs, configuration files, etc.). But you can keep most of that convenience by installing Fast2 on a side server running on the same internal network as the ECM you are trying to reach! Isolating the programs on top of a tightly knit infrastructure will definitely pay off once you need to dig deeper.

Option #2: In the cloud

Architecture

Installing Fast2 in the cloud brings a few key advantages: depending on the provider, you may see a significant cost reduction, along with scalability and performance benefits. These upsides come with a few drawbacks to weigh against the confidentiality requirements of the migration project: a remote installation implies remote access to both the source and destination ECMs.

Performance-wise

As always, migration performance depends on processing speed (the resources allocated to Fast2) and on how easily the storage systems communicate. Running Fast2 on a remote machine that can be upgraded when needed keeps the tool from becoming the limiting factor throughout the migration.
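A back-of-the-envelope model shows why an upgradable host matters: when stages run concurrently as a pipeline, the overall throughput is capped by the slowest one. The sketch below assumes three stages (reading from the source, processing in Fast2, uploading to the target) with steady rates in MB/s; the function, stage names and figures are invented for illustration and are not Fast2 benchmarks.

```python
def migration_hours(volume_gb: float, stage_rates_mb_s: dict) -> tuple:
    """Estimate the duration of a migration limited by its slowest stage.

    Returns (bottleneck stage name, estimated hours). Assumes all stages run
    in parallel as a pipeline, so the slowest one sets the pace.
    """
    bottleneck = min(stage_rates_mb_s, key=stage_rates_mb_s.get)
    rate = stage_rates_mb_s[bottleneck]
    seconds = volume_gb * 1000 / rate  # 1 GB = 1000 MB (decimal units)
    return bottleneck, seconds / 3600


if __name__ == "__main__":
    # Illustrative figures only.
    rates = {"source read": 120.0, "fast2 processing": 40.0, "upload to S3": 100.0}
    stage, hours = migration_hours(2000, rates)
    print(f"bottleneck: {stage}, ~{hours:.1f} h")  # bottleneck: fast2 processing, ~13.9 h
    # Scaling up the Fast2 host moves the bottleneck elsewhere:
    rates["fast2 processing"] = 160.0
    stage, hours = migration_hours(2000, rates)
    print(f"bottleneck: {stage}, ~{hours:.1f} h")  # bottleneck: upload to S3, ~5.6 h
```

Once Fast2 is no longer the slowest stage, further gains have to come from the source system or the network.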

Ease of debugging

Since a remote installation gives Fast2 a completely isolated environment, you get the same advantages as when installing the ETL on a separate on-premise machine.

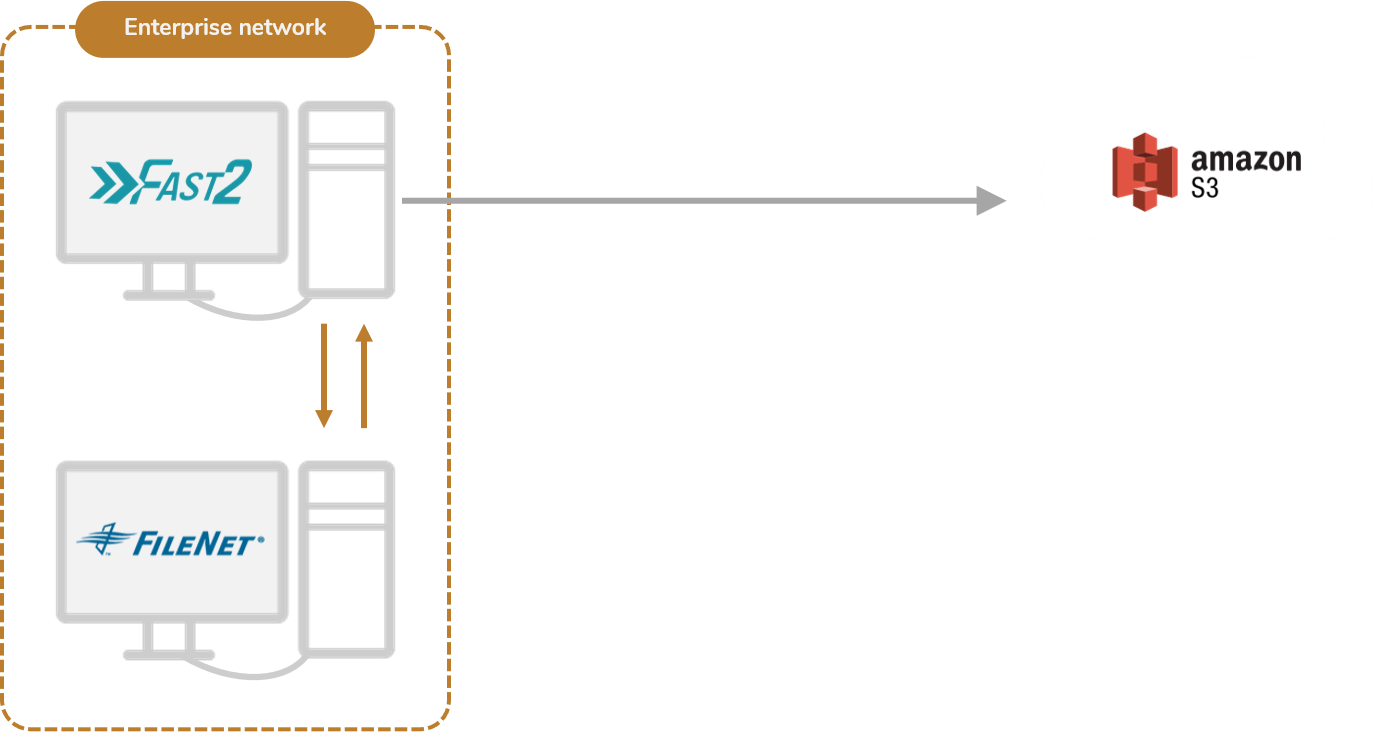

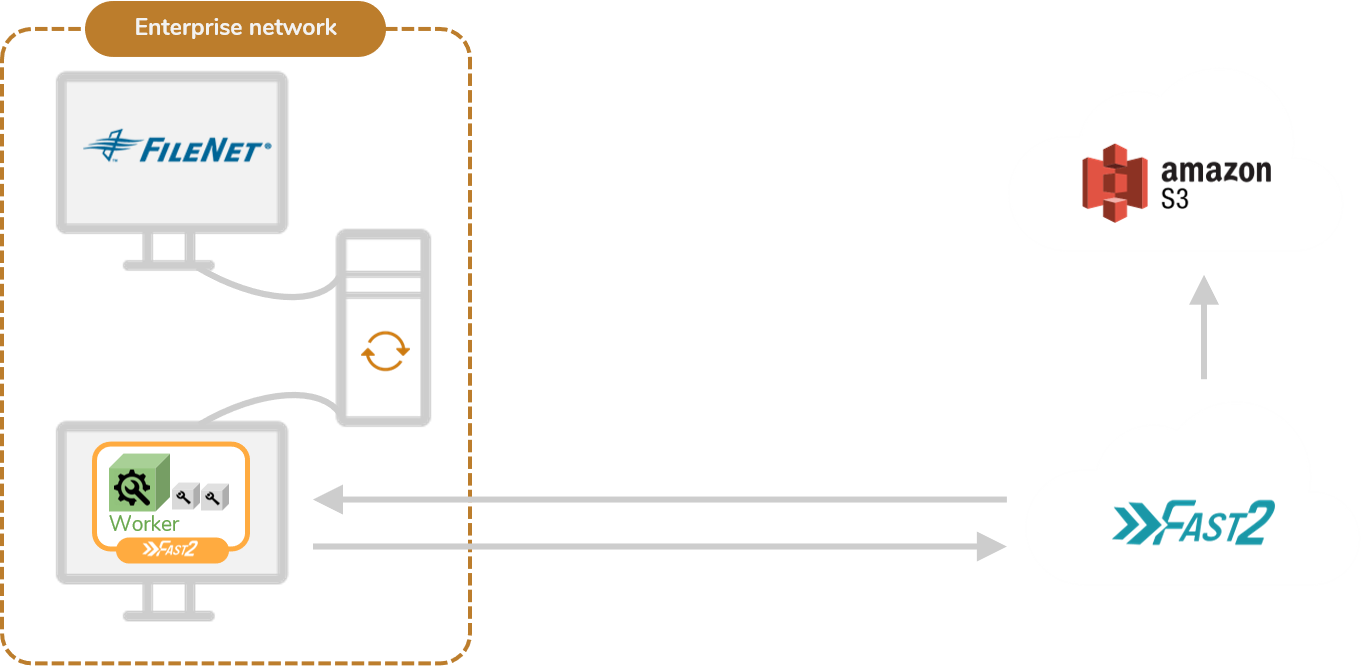

Option #3: Hybrid

Architecture

This option takes advantage of the two-part division of work that Fast2 relies on:

- the broker, orchestrating the migration on one hand,

- the worker, executing the tasks on the other.

Architecturally, you end up with Fast2 installed on a remote machine and an additional worker installed on either the source or the destination server (or even one on each). This setup can meet the confidentiality requirements you have been asked to comply with (a private connection lets the worker access the ECM, and nothing is exposed outside your network) and speed up the retrieval of data and documents, without overly complicating any technical intervention that may be needed.

Performance-wise

This configuration delivers solid performance. By avoiding a bottleneck server hosting both the ETL and one of your ECMs, you also avoid ECM reachability issues that could cause slowdowns. For high-volume migrations, delegating heavy processing to dedicated workers this way will benefit your project timeline, since extraction is one of the most resource-demanding steps. In that respect, it is barely any different from the on-premise approach.

Ease of debugging

Debugging is the go/no-go criterion for this installation. If you choose this path, you will soon face its major downside: knowing where to look for which information. The broker records high-level progress indicators (flow speeds, completion ratios, and more), while the workers trace migration progress at the task level. Although all logging information is accessible from either the Explore page (which gathers document and metadata details for a given task) or Kibana, you need to know exactly where the remote or associated workers in charge of a specific task are running. This can get slightly more complicated than with the two previous options. However, you preserve the integrity of each server, since the most resource-demanding processes are kept in separate environments.
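The practical difficulty is mapping a task to the machine whose logs you need to open. As a hypothetical illustration, suppose you have gathered task records (task id, worker name, host, status) however your setup allows; the `TaskRecord` layout below is invented for this sketch and is not a Fast2 log or API format. Grouping failed tasks by host tells you which machine to inspect first.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class TaskRecord:
    # Hypothetical fields, not Fast2's actual data model.
    task_id: str
    worker: str
    host: str
    status: str  # e.g. "done" or "failed"


def failed_tasks_by_host(records):
    """Map each host to the sorted ids of the failed tasks its workers handled."""
    by_host = defaultdict(list)
    for r in records:
        if r.status == "failed":
            by_host[r.host].append(r.task_id)
    return {host: sorted(ids) for host, ids in by_host.items()}


if __name__ == "__main__":
    records = [
        TaskRecord("t1", "worker-onprem", "filenet-srv", "failed"),
        TaskRecord("t2", "worker-cloud", "ec2-broker", "done"),
        TaskRecord("t3", "worker-onprem", "filenet-srv", "failed"),
        TaskRecord("t4", "worker-cloud", "ec2-broker", "failed"),
    ]
    print(failed_tasks_by_host(records))
    # {'filenet-srv': ['t1', 't3'], 'ec2-broker': ['t4']}
```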

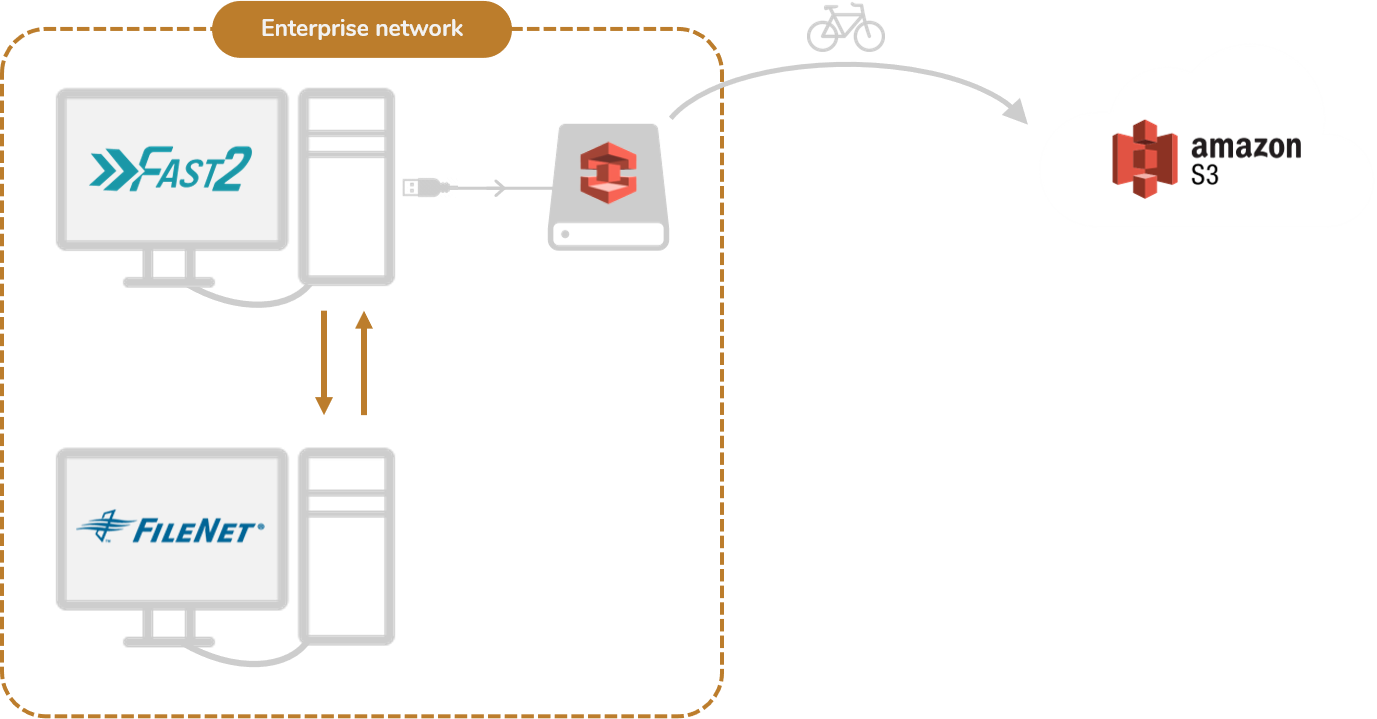

Option #4: AWS Snowball

Architecture

The last option you might consider is AWS Snowball. All metadata and content transfers then happen inside your closed internal network. Not only does this help meet confidentiality requirements, it may also be more flexible to set up and integrate. The major downside of this approach, though, is the delay added by the physical transfer per se, which happens once Fast2 has finished its job and the drive is shipped to an AWS facility.

Performance-wise

Since the Snowball drive sits close to both your source ECM and the Fast2 migration engine, it can drastically shorten the loading phase of the project (shipping time aside).

It does not differ much from an on-premise-to-on-premise design, although in the end you benefit from having your target ECM in the cloud. Keep in mind, however, that this configuration does not scale well.

Another major upside is that it preserves your bandwidth: Fast2 can migrate the data while your coworkers keep using the network for their own projects.
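To weigh Snowball against an online transfer, a rough comparison of durations is usually enough. The sketch below assumes a steady network link of which only a share can be dedicated to the migration, and treats the local write speed to the drive and the shipping/import delay as inputs you estimate yourself; the function names and all figures are illustrative assumptions, and per-file overheads are ignored.

```python
def network_transfer_days(volume_tb: float, link_mbit_s: float, usable_share: float) -> float:
    """Days needed to push `volume_tb` terabytes over the network.

    `usable_share` is the fraction of the link the migration may use
    (e.g. 0.5 leaves half of it to other traffic).
    """
    if not 0 < usable_share <= 1:
        raise ValueError("usable_share must be in (0, 1]")
    bits = volume_tb * 1e12 * 8                      # decimal TB -> bits
    seconds = bits / (link_mbit_s * 1e6 * usable_share)
    return seconds / 86400


def snowball_days(volume_tb: float, local_write_mb_s: float,
                  shipping_and_import_days: float) -> float:
    """Days to fill the drive locally, plus an estimated shipping/import delay."""
    seconds = volume_tb * 1e6 / local_write_mb_s     # decimal TB -> MB
    return seconds / 86400 + shipping_and_import_days


if __name__ == "__main__":
    # Illustrative inputs: 50 TB, a 1 Gbit/s link with half usable,
    # 300 MB/s local write speed, 7 days of shipping and import.
    print(f"network:  {network_transfer_days(50, 1000, 0.5):.1f} days")  # 9.3 days
    print(f"snowball: {snowball_days(50, 300, 7):.1f} days")             # 8.9 days
```

With numbers this close, the bandwidth left to other users, rather than raw duration, may well be the deciding factor.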

Ease of debugging

Finally, identifying which component is involved in a given issue is fairly easy, since the three main components are completely independent of each other: Fast2 connects to the FileNet server to retrieve content and metadata, FileNet serves that data through its built-in API, and the data is then processed and transformed on the Fast2 server before being sent to the Snowball drive.

Let’s sum up!

With all this in mind, you should now have a clearer idea of how to install our tool. Every option has its own merits, and you are the best judge of which one fits your case.

If you are still unsure which option suits you best, keep in mind that it is fairly easy to switch from either the “same network” or the “remote” configuration to the hybrid architecture, so you can safely commit to either of those two.